Normal-Normal model

LG

2021-03-09

normalnormal-model.RmdThis vignette introduces the Normal-Normal model. It is designed to be a benchmark.

Model

Consider Normal samples \(X_i\) from \(m\) different sources. Each source is sampled \(n\) times.

Dirichlet parameter are also assumed to be sampled from Dirichlet distributions.

- \(\boldsymbol{X}_{ij} \sim N(\mu_i, \sigma_i)\) iid \(\forall j = 1, \ldots, n\) with \(i = 1, \ldots, m\)

- \(\mu_i \sim N(\mu_0^{(\mu)}, \sigma_0^{(\mu)}) \quad \forall i = 1, \ldots, m\)

- \(\sigma_i \sim N(\mu_0^{(\sigma)}, \sigma_0^{(\sigma)}) \quad \forall i = 1, \ldots, m\)

We assume that \(\mu_0^{(\mu)}, \sigma_0^{(\mu)}, \mu_0^{(\sigma)}, \sigma_0^{(\sigma)}\) are known.

Data

The Normal-Normal model is implemented in rsamplestudy.

Let’s generate some data:

library(dplyr)

#>

#> Attaching package: 'dplyr'

#> The following objects are masked from 'package:stats':

#>

#> filter, lag

#> The following objects are masked from 'package:base':

#>

#> intersect, setdiff, setequal, union

library(ggplot2)

library(rsamplestudy)

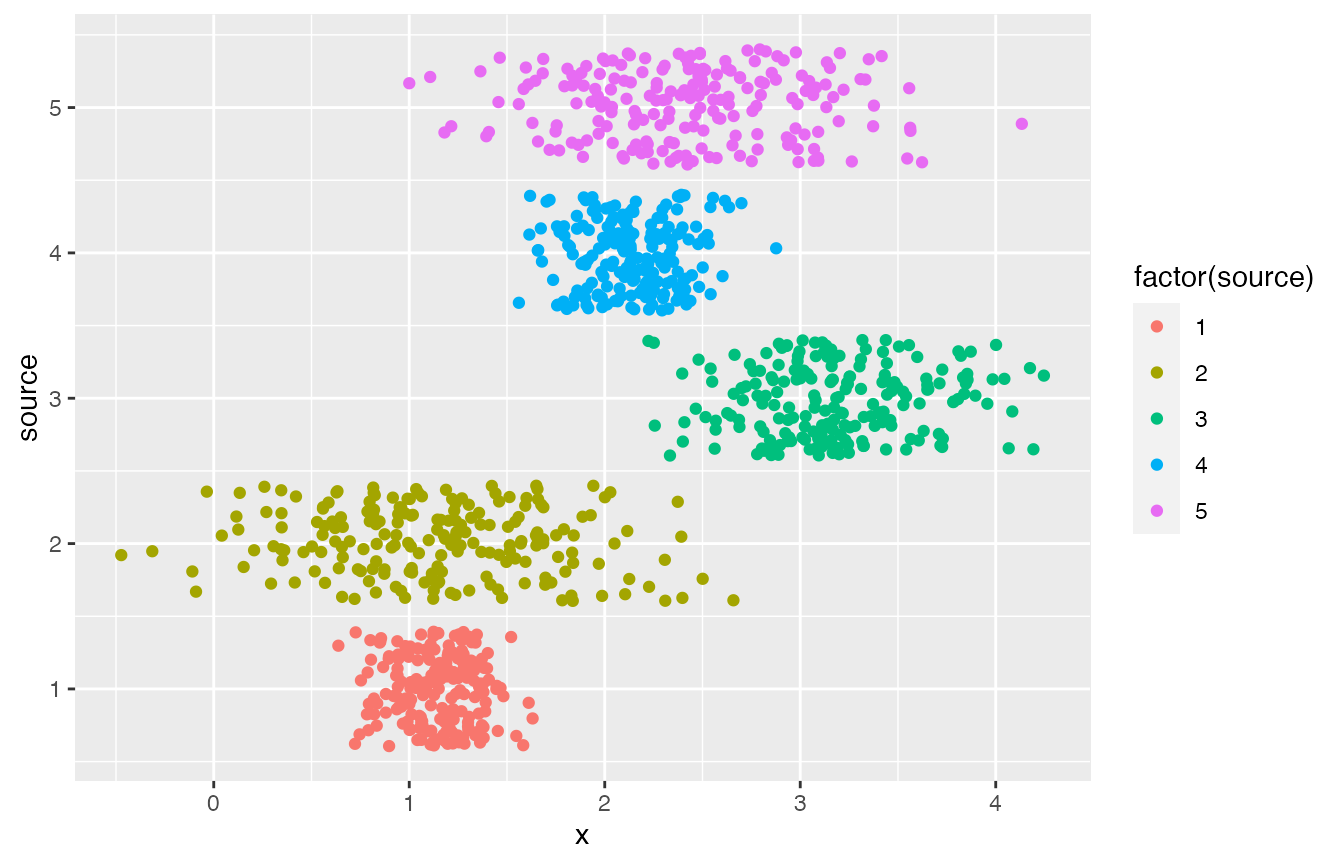

n <- 200

m <- 5

list_pop <- rsamplestudy::fun_rnorm_population(n, m)

list_pop$df_pop %>%

ggplot() +

geom_point(aes(x = x, y = source, col = factor(source)), position = 'jitter')

list_pop$df_sources

#> # A tibble: 5 x 3

#> source mu sigma

#> <int> <dbl> <dbl>

#> 1 1 1.13 0.209

#> 2 2 1.15 0.547

#> 3 3 3.18 0.426

#> 4 4 2.15 0.246

#> 5 5 2.47 0.561

list_pop$list_hyper

#> $m_mu

#> [1] 2.287247

#>

#> $m_sigma

#> [1] 1.196772

#>

#> $s_mu

#> [1] -0.6942925

#>

#> $s_sigma

#> [1] 0.412293Generate two sets of samples, under two different hypotheses:

k_ref <- 10

k_quest <- 10

source_ref <- 1

source_same <- source_ref

source_diff <- 3 # single questioned different source

list_samples_same <- rsamplestudy::make_dataset_splits(list_pop$df_pop, k_ref, k_quest, source_ref = source_ref, source_quest = source_same)

list_samples_diff <- rsamplestudy::make_dataset_splits(list_pop$df_pop, k_ref, k_quest, source_ref = source_ref, source_quest = source_diff)Organize data, and elicit the hyperprior from the background data (assume vague hyperpriors):

list_data_same <- rstanBF::stanBF_prepare_rsamplestudy_data(list_pop, list_samples_same)

list_data_diff <- rstanBF::stanBF_prepare_rsamplestudy_data(list_pop, list_samples_diff)

list_hyper <- rstanBF::stanBF_elicit_hyperpriors(list_samples_same$df_background, 'NormNorm', 'vague')Perform the NUTS sampling:

n.iter <- 5000

n.burnin <- 1000

n.cores <- 3

n.chains <- n.cores

obj_StanBF_same <- rstanBF::compute_BF_Stan(list_data_same, 'NormNorm', list_hyper, n.iter = n.iter, n.burnin = n.burnin, n.cores = n.cores, n.chains = n.chains, silent = TRUE)

obj_StanBF_diff <- rstanBF::compute_BF_Stan(list_data_diff, 'NormNorm', list_hyper, n.iter = n.iter, n.burnin = n.burnin, n.cores = n.cores, n.chains = n.chains, silent = TRUE)

obj_StanBF_same

#> stanBF object containing posterior samples from H1, H2.

#> Model: Normal-Normal

#> Obtained BF: 1697753

#> Ran with 3 chains, 5000 HMC iterations.

obj_StanBF_diff

#> stanBF object containing posterior samples from H1, H2.

#> Model: Normal-Normal

#> Obtained BF: 1.301013e-05

#> Ran with 3 chains, 5000 HMC iterations.More details on the Stan outputs:

obj_StanBF_same$stanfit

#> $H1

#> Inference for Stan model: stan_NormNorm_H1.

#> 3 chains, each with iter=5000; warmup=1000; thin=1;

#> post-warmup draws per chain=4000, total post-warmup draws=12000.

#>

#> mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff

#> mu_ref 1.09 0.00 0.04 1.00 1.06 1.09 1.12 1.18 7517

#> sigma_ref 0.19 0.00 0.03 0.14 0.17 0.19 0.21 0.27 6888

#> sim_mu_ref 2.47 0.93 100.43 -194.84 -64.44 2.77 69.74 198.64 11659

#> sim_sigma_ref 79.93 0.55 60.26 3.48 31.20 68.20 115.00 223.64 12182

#> sim_d_ref 1.04 1.30 142.27 -284.03 -85.48 1.73 88.28 280.44 11962

#> pred_d_ref 1.09 0.00 0.20 0.69 0.96 1.09 1.22 1.49 11329

#> lp__ -7.95 0.02 1.05 -10.80 -8.36 -7.62 -7.19 -6.91 4637

#> Rhat

#> mu_ref 1

#> sigma_ref 1

#> sim_mu_ref 1

#> sim_sigma_ref 1

#> sim_d_ref 1

#> pred_d_ref 1

#> lp__ 1

#>

#> Samples were drawn using NUTS(diag_e) at Tue Mar 9 09:14:30 2021.

#> For each parameter, n_eff is a crude measure of effective sample size,

#> and Rhat is the potential scale reduction factor on split chains (at

#> convergence, Rhat=1).

#>

#> $H2

#> Inference for Stan model: stan_NormNorm_H2.

#> 3 chains, each with iter=5000; warmup=1000; thin=1;

#> post-warmup draws per chain=4000, total post-warmup draws=12000.

#>

#> mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff

#> mu_ref 1.11 0.00 0.06 1.00 1.07 1.11 1.14 1.22 9360

#> sigma_ref 0.17 0.00 0.05 0.10 0.14 0.16 0.19 0.29 7665

#> mu_quest 1.07 0.00 0.08 0.91 1.02 1.07 1.13 1.24 7677

#> sigma_quest 0.25 0.00 0.07 0.15 0.20 0.24 0.28 0.43 7074

#> sim_mu_ref -1.24 0.96 100.48 -197.19 -68.85 -1.96 66.14 196.53 10924

#> sim_sigma_ref 79.45 0.55 59.72 3.43 31.57 67.96 114.67 222.73 11592

#> sim_mu_quest 0.20 0.93 99.99 -192.64 -67.50 -0.70 67.63 197.41 11549

#> sim_sigma_quest 80.28 0.55 60.93 2.73 32.21 67.75 115.46 226.50 12178

#> sim_d_ref -0.41 1.36 142.25 -280.41 -87.49 -1.17 87.24 285.12 10973

#> sim_d_quest 1.46 1.32 143.05 -286.81 -86.95 0.42 88.71 286.26 11805

#> pred_d_ref 1.11 0.00 0.19 0.73 0.99 1.11 1.22 1.48 10935

#> pred_d_quest 1.08 0.00 0.27 0.53 0.91 1.08 1.25 1.62 11669

#> lp__ -21.84 0.02 1.54 -25.76 -22.61 -21.50 -20.70 -19.87 4920

#> Rhat

#> mu_ref 1

#> sigma_ref 1

#> mu_quest 1

#> sigma_quest 1

#> sim_mu_ref 1

#> sim_sigma_ref 1

#> sim_mu_quest 1

#> sim_sigma_quest 1

#> sim_d_ref 1

#> sim_d_quest 1

#> pred_d_ref 1

#> pred_d_quest 1

#> lp__ 1

#>

#> Samples were drawn using NUTS(diag_e) at Tue Mar 9 09:14:32 2021.

#> For each parameter, n_eff is a crude measure of effective sample size,

#> and Rhat is the potential scale reduction factor on split chains (at

#> convergence, Rhat=1).

obj_StanBF_diff$stanfit

#> $H1

#> Inference for Stan model: stan_NormNorm_H1.

#> 3 chains, each with iter=5000; warmup=1000; thin=1;

#> post-warmup draws per chain=4000, total post-warmup draws=12000.

#>

#> mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff

#> mu_ref 2.19 0.00 0.25 1.70 2.03 2.19 2.36 2.70 7447

#> sigma_ref 1.12 0.00 0.20 0.81 0.98 1.09 1.23 1.58 7281

#> sim_mu_ref -0.41 0.93 100.57 -196.93 -68.39 -0.69 67.03 197.12 11648

#> sim_sigma_ref 80.93 0.56 60.62 3.18 32.83 69.40 116.19 225.55 11684

#> sim_d_ref -0.69 1.32 141.43 -282.72 -87.36 0.08 86.50 272.43 11558

#> pred_d_ref 2.20 0.01 1.17 -0.13 1.44 2.21 2.95 4.53 10868

#> lp__ -41.51 0.01 1.02 -44.20 -41.92 -41.20 -40.78 -40.50 5088

#> Rhat

#> mu_ref 1

#> sigma_ref 1

#> sim_mu_ref 1

#> sim_sigma_ref 1

#> sim_d_ref 1

#> pred_d_ref 1

#> lp__ 1

#>

#> Samples were drawn using NUTS(diag_e) at Tue Mar 9 09:14:39 2021.

#> For each parameter, n_eff is a crude measure of effective sample size,

#> and Rhat is the potential scale reduction factor on split chains (at

#> convergence, Rhat=1).

#>

#> $H2

#> Inference for Stan model: stan_NormNorm_H2.

#> 3 chains, each with iter=5000; warmup=1000; thin=1;

#> post-warmup draws per chain=4000, total post-warmup draws=12000.

#>

#> mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff

#> mu_ref 1.21 0.00 0.08 1.06 1.16 1.21 1.25 1.36 7315

#> sigma_ref 0.23 0.00 0.07 0.14 0.18 0.22 0.26 0.39 6579

#> mu_quest 3.18 0.00 0.13 2.91 3.09 3.17 3.26 3.45 8651

#> sigma_quest 0.41 0.00 0.12 0.25 0.33 0.39 0.46 0.69 7623

#> sim_mu_ref 0.32 0.91 100.59 -196.72 -67.28 0.02 67.83 198.17 12133

#> sim_sigma_ref 80.40 0.55 60.40 3.35 31.81 68.68 116.72 223.96 11976

#> sim_mu_quest 0.63 0.93 100.64 -195.99 -67.32 0.58 68.27 201.36 11780

#> sim_sigma_quest 80.44 0.56 60.93 3.11 31.79 68.24 115.19 225.33 11887

#> sim_d_ref -0.23 1.29 141.78 -281.99 -85.88 -0.99 85.11 285.31 12137

#> sim_d_quest 1.54 1.29 143.20 -277.84 -88.42 0.58 87.72 289.70 12331

#> pred_d_ref 1.21 0.00 0.25 0.72 1.05 1.21 1.36 1.71 12119

#> pred_d_quest 3.18 0.00 0.45 2.29 2.90 3.18 3.45 4.07 12004

#> lp__ -28.82 0.03 1.58 -32.87 -29.57 -28.47 -27.66 -26.88 3785

#> Rhat

#> mu_ref 1

#> sigma_ref 1

#> mu_quest 1

#> sigma_quest 1

#> sim_mu_ref 1

#> sim_sigma_ref 1

#> sim_mu_quest 1

#> sim_sigma_quest 1

#> sim_d_ref 1

#> sim_d_quest 1

#> pred_d_ref 1

#> pred_d_quest 1

#> lp__ 1

#>

#> Samples were drawn using NUTS(diag_e) at Tue Mar 9 09:14:41 2021.

#> For each parameter, n_eff is a crude measure of effective sample size,

#> and Rhat is the potential scale reduction factor on split chains (at

#> convergence, Rhat=1).Post-processing

Using general post-processing utils in bayesplot package:

suppressPackageStartupMessages(library(rstan))

library(bayesplot)

#> This is bayesplot version 1.8.0

#> - Online documentation and vignettes at mc-stan.org/bayesplot

#> - bayesplot theme set to bayesplot::theme_default()

#> * Does _not_ affect other ggplot2 plots

#> * See ?bayesplot_theme_set for details on theme setting

results_same_H1 <- obj_StanBF_same$stanfit$H1 %>% As.mcmc.list()

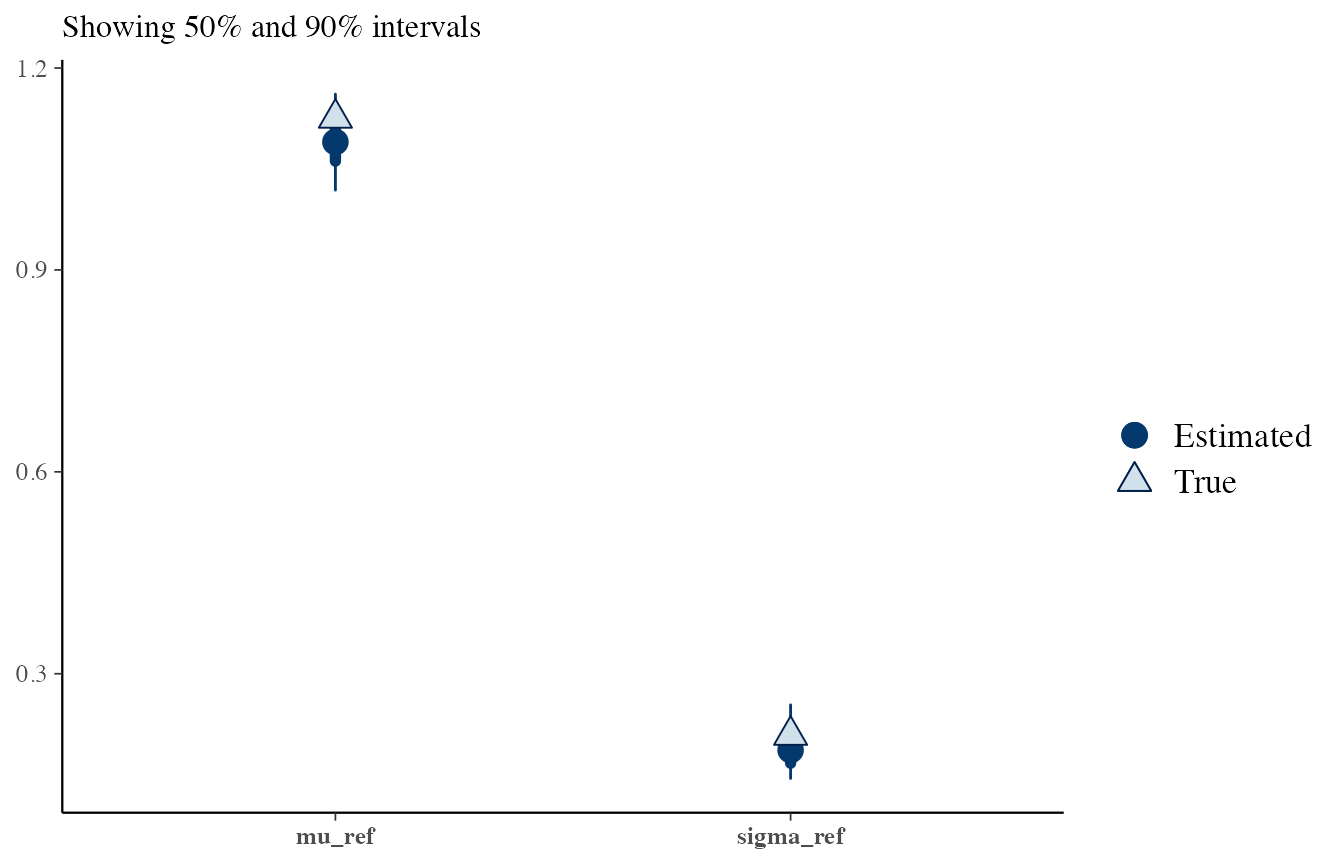

results_same_H2 <- obj_StanBF_same$stanfit$H2 %>% As.mcmc.list()Parameter recovery

First, we recover NUTS draws and true values. Then we use bayesplot tools to plot them.

Notice that the orders of the variables in mcmc_recover_intervals arguments must match.

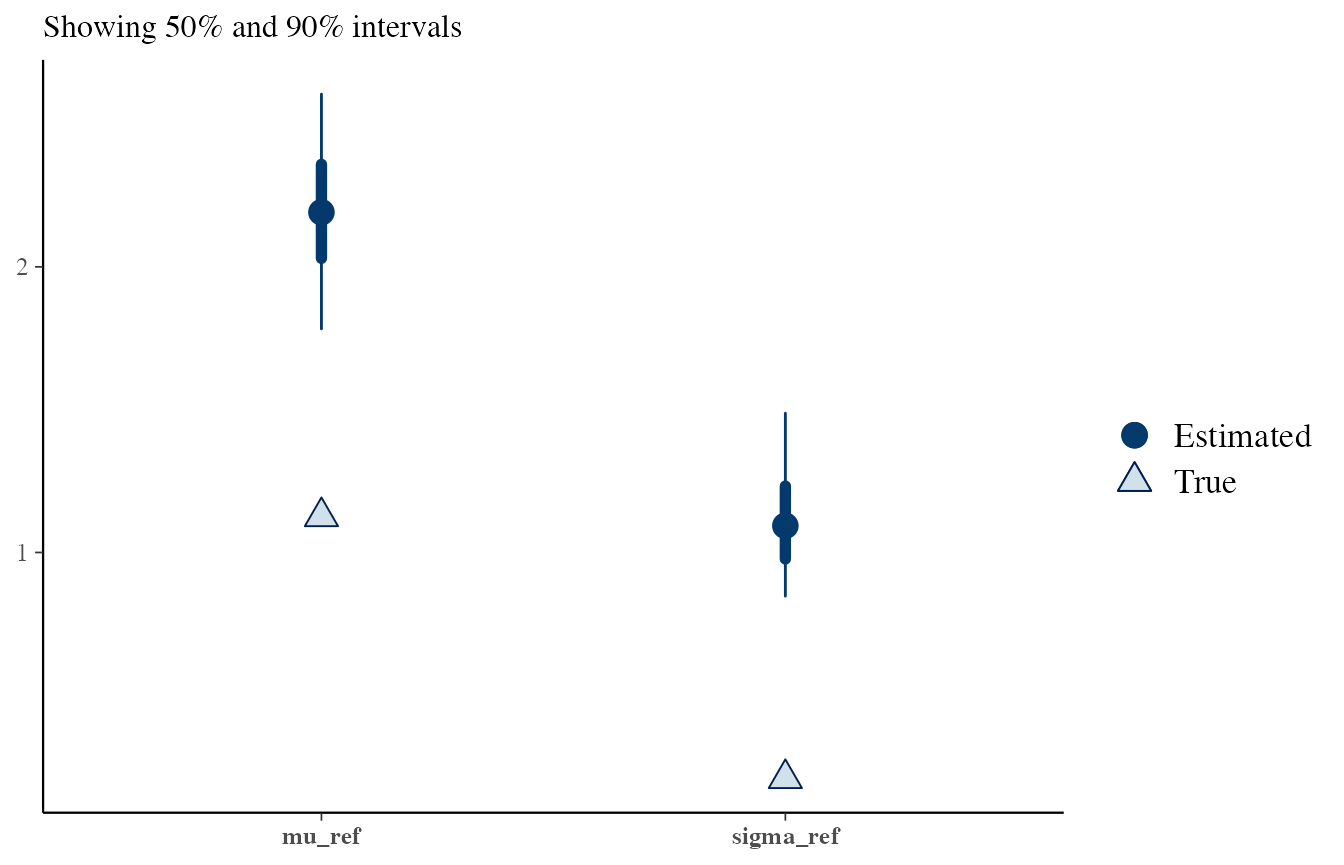

Questioned data sampled under \(H_1\):

# H1

draws <- obj_StanBF_same$stanfit$H1 %>% As.mcmc.list(pars = c('mu_ref', 'sigma_ref'))

df_sources_ref <- list_pop$df_sources %>% filter(source == source_same) %>% select(mu, sigma)

true_sources <- as.numeric(df_sources_ref)

bayesplot::mcmc_recover_intervals(draws, true_sources)

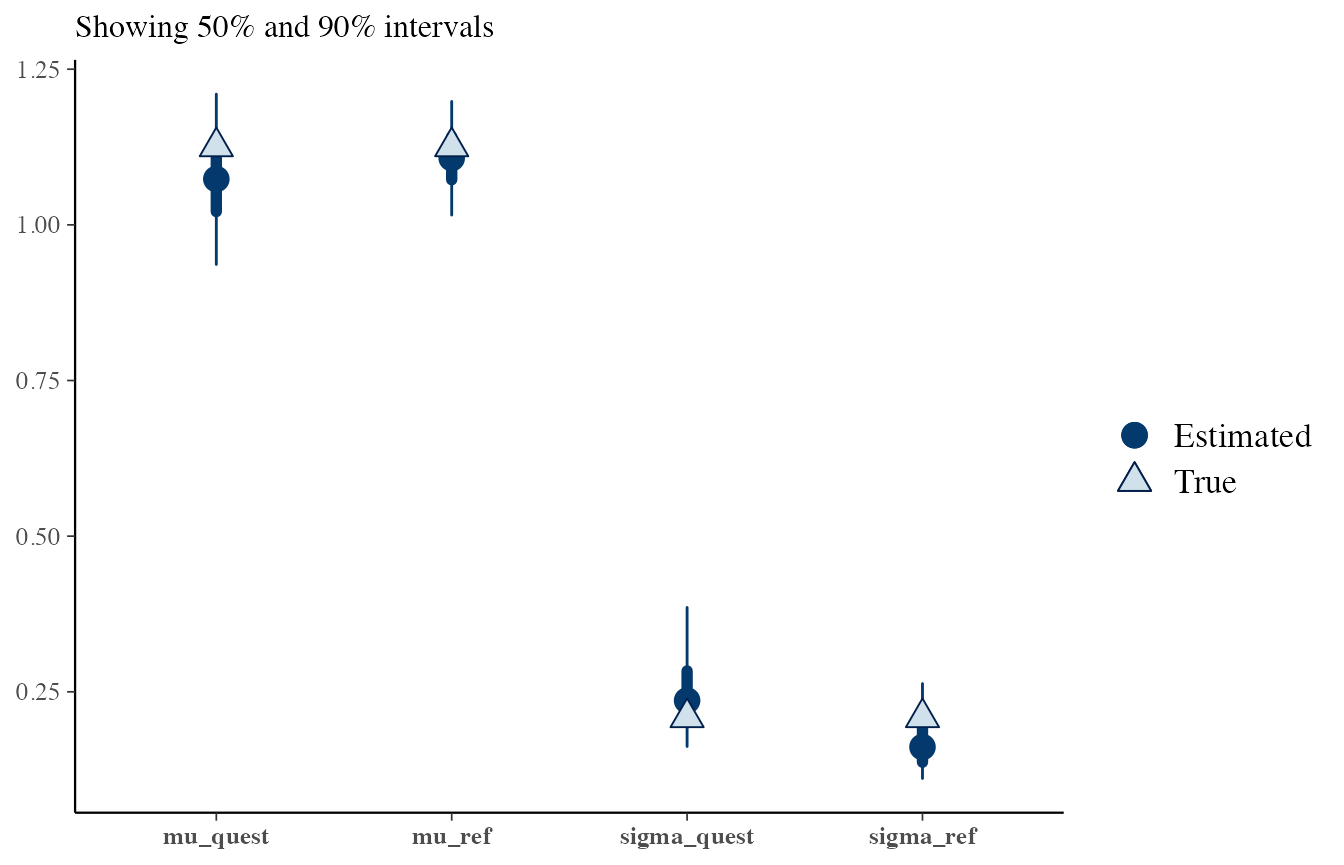

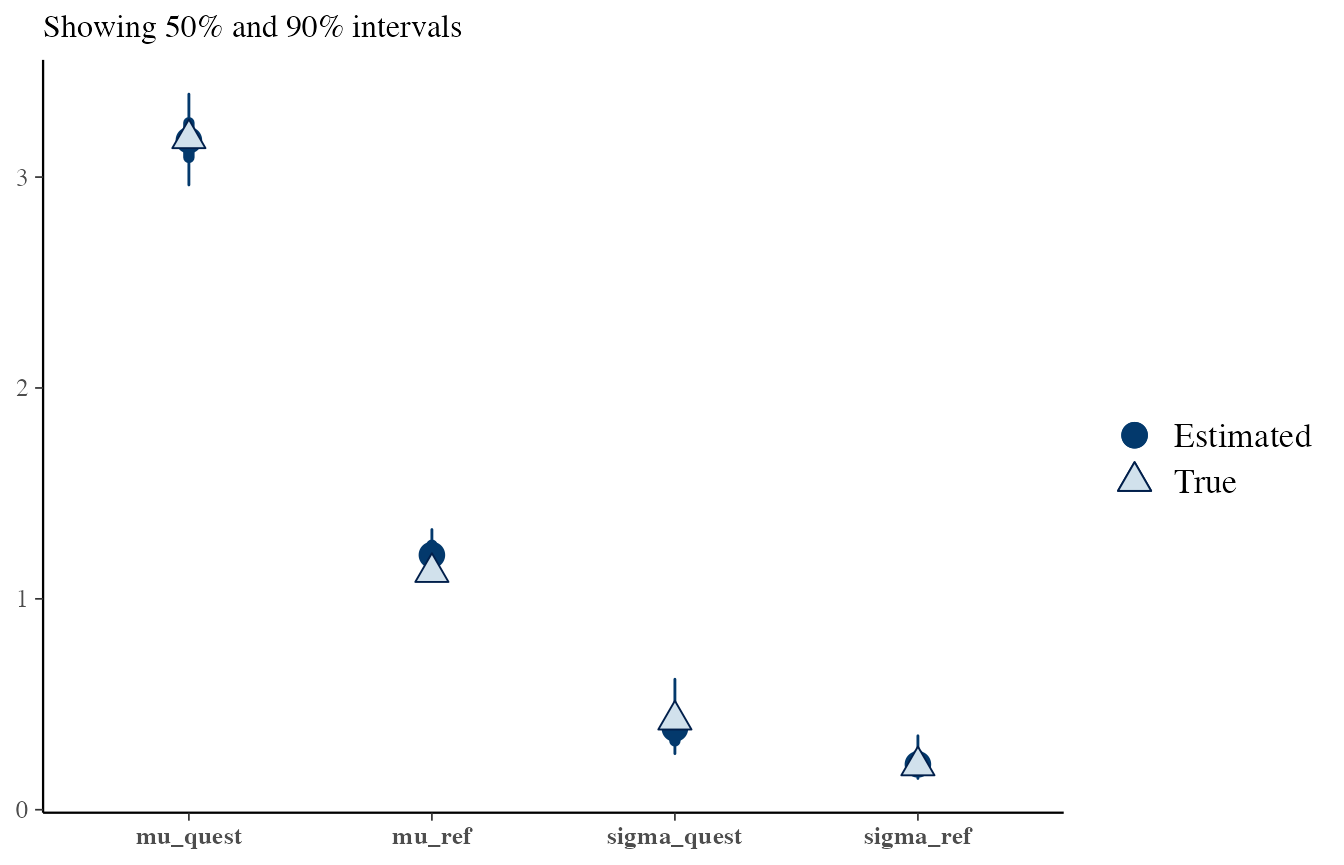

# H2, reference and questioned

draws <- obj_StanBF_same$stanfit$H2 %>% As.mcmc.list(pars = c('mu_ref', 'sigma_ref', 'mu_quest', 'sigma_quest'))

df_sources <- list_pop$df_sources %>% filter(source == source_same) %>% select(mu, sigma)

true_sources <- rep(as.numeric(df_sources), 2)

bayesplot::mcmc_recover_intervals(draws, true_sources)

Questioned data sampled under \(H_2\):

# H1: assuming that sources are the same

# This is not true, so the posteriors do not fit well

draws <- obj_StanBF_diff$stanfit$H1 %>% As.mcmc.list(pars = c('mu_ref', 'sigma_ref'))

df_sources_ref <- list_pop$df_sources %>% filter(source == source_same) %>% select(mu, sigma)

true_sources <- as.numeric(df_sources_ref)

bayesplot::mcmc_recover_intervals(draws, true_sources)

# H2, reference and questioned from different sources

# This is true, the posteriors are also good

draws <- obj_StanBF_diff$stanfit$H2 %>% As.mcmc.list(pars = c('mu_ref', 'sigma_ref', 'mu_quest', 'sigma_quest'))

df_sources_ref <- list_pop$df_sources %>% filter(source == source_same) %>% select(mu, sigma)

df_sources_quest <- list_pop$df_sources %>% filter(source == source_diff) %>% select(mu, sigma)

true_sources <- c(as.numeric(df_sources_ref), as.numeric(df_sources_quest))

bayesplot::mcmc_recover_intervals(draws, true_sources)

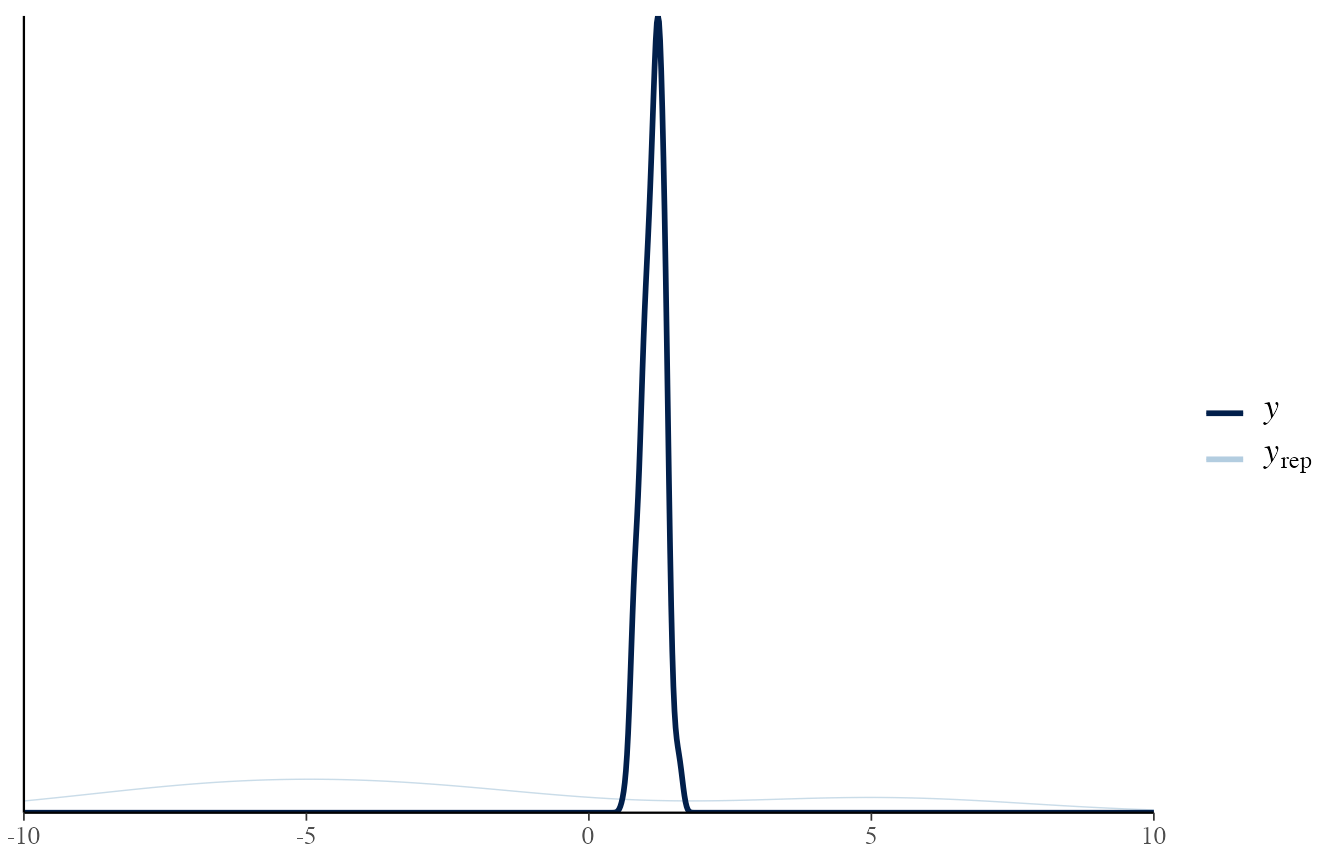

Prior and posterior predictive checks

To simplify, consider only when data is sampled from the same source.

Here, we plot the prior predictive distribution.

It should generate data which should be realistic, but not necessarily adapted to the observed data.

It represents the background knowledge of our data.

Here, we specified a very vague prior, so the generated values are really extreme compared to the one measured.

# H1: prior predictive

draws_prior <- obj_StanBF_same$stanfit$H1 %>% as.matrix(pars = c('sim_d_ref'))

data_ref <- list_pop$df_pop %>% filter(source == source_ref) %>% pull('x')

# extract a subset of draws

draws_prior_sub <- t(as.matrix(draws_prior[1:length(data_ref)]))

bayesplot::ppc_dens_overlay(data_ref, draws_prior_sub) +

scale_x_continuous(limits = c(-10, 10))

#> Warning: Removed 196 rows containing non-finite values (stat_density).

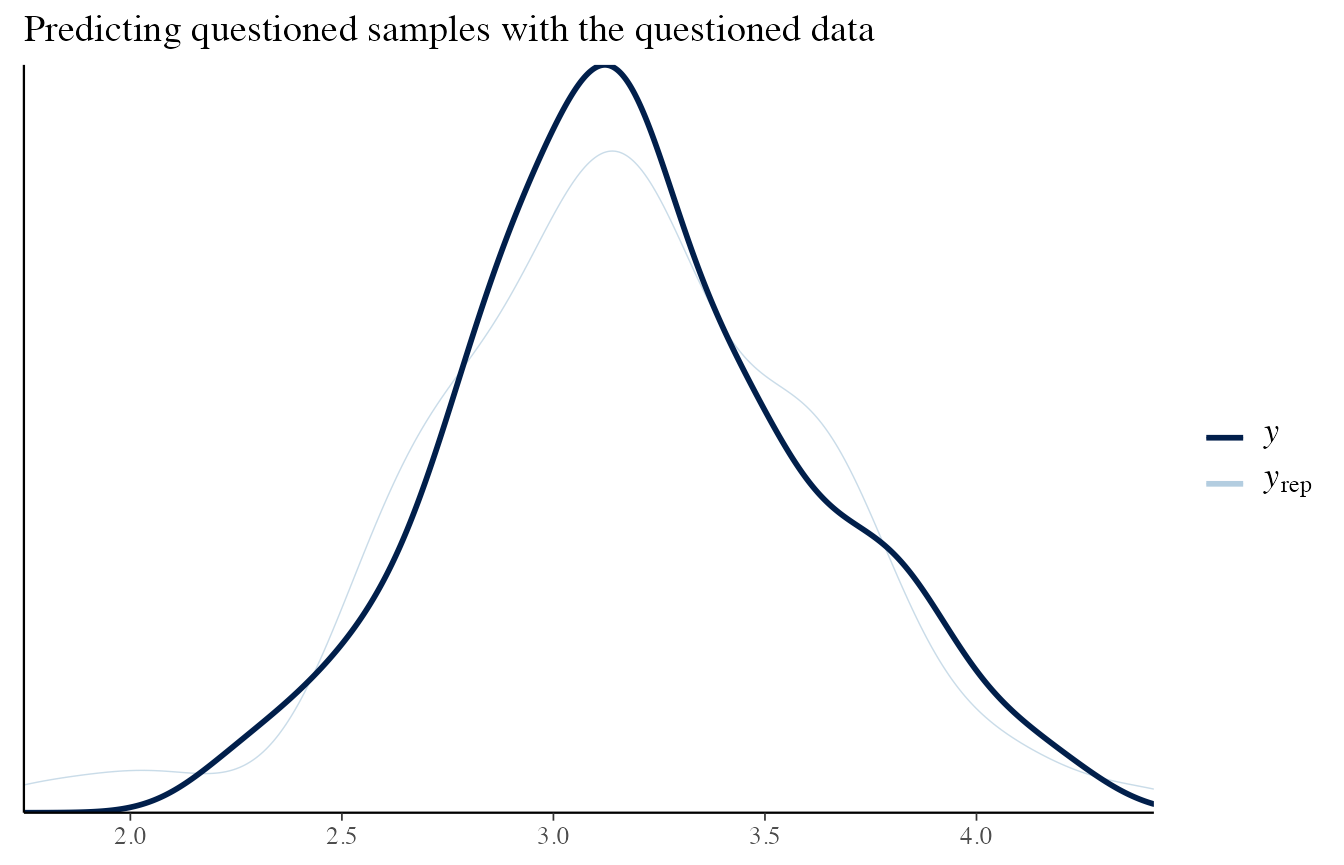

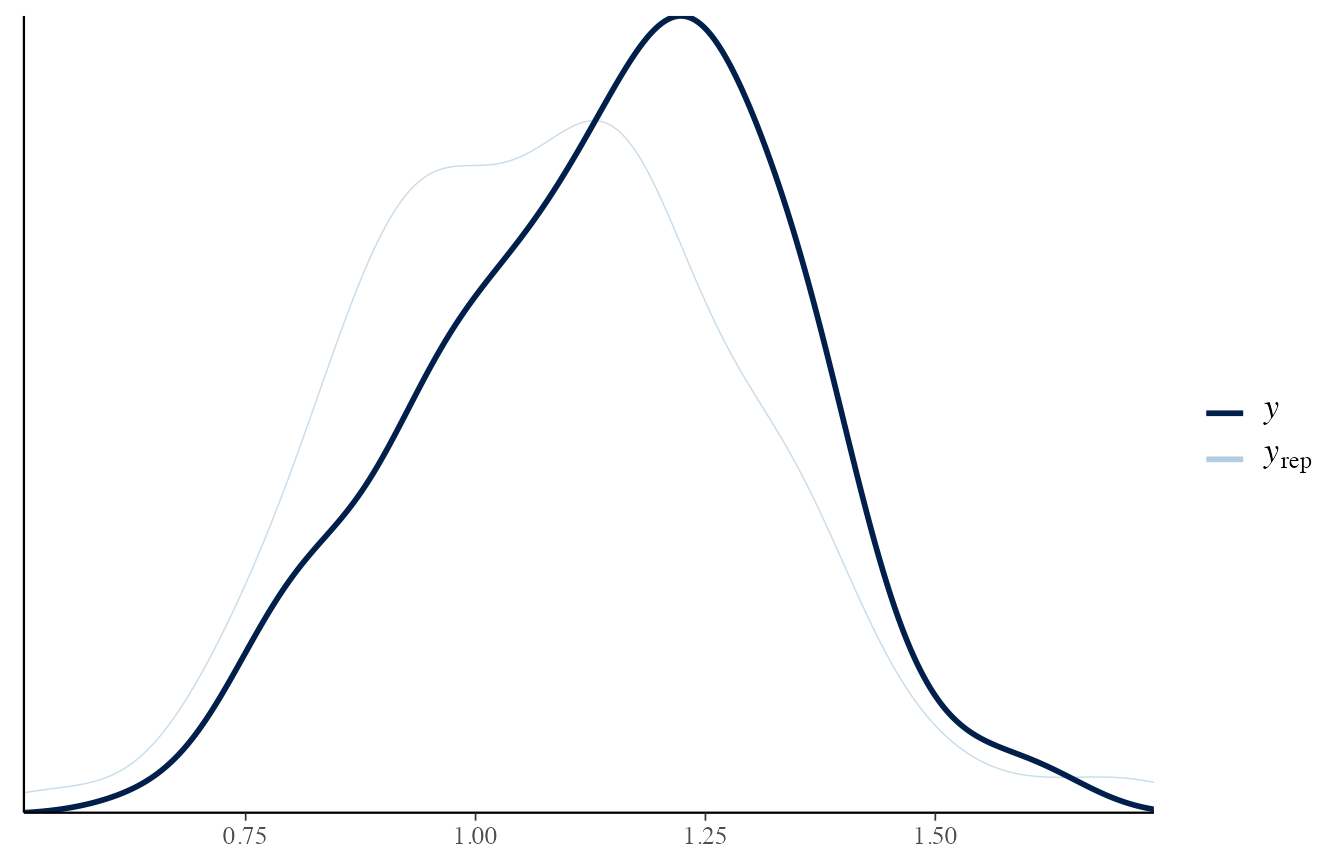

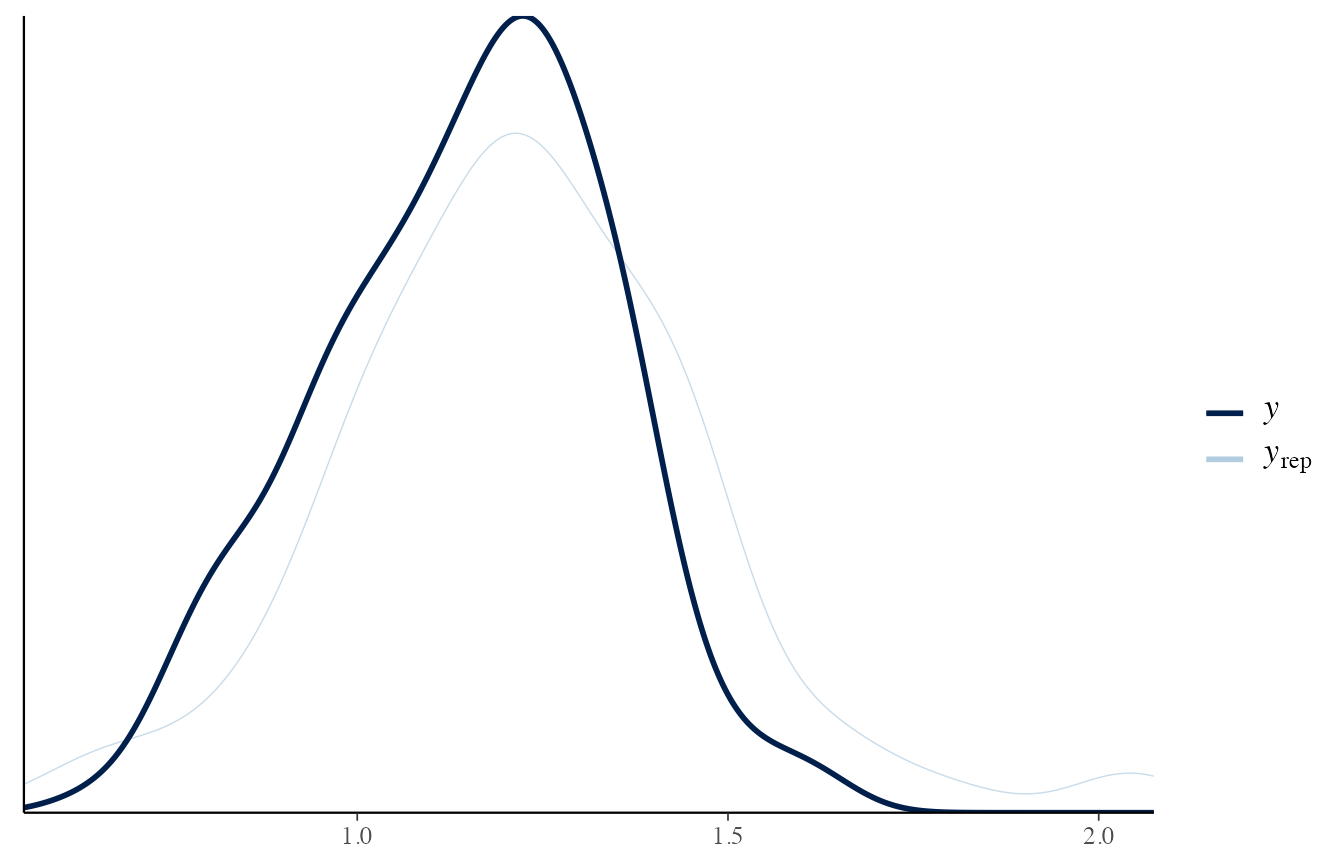

The posterior predictive distribution, instead, should be a good approximation of the observed data.

If it does not occur, it means that the model is not capable of predicting from the correct distribution.

In other words, the trained model is not adapted to the observed data.

draws_posterior <- obj_StanBF_same$stanfit$H1 %>% as.matrix(pars = c('pred_d_ref'))

draws_posterior_sub <- t(as.matrix(draws_posterior[length(draws_posterior) - (1:length(data_ref)) ]))

bayesplot::ppc_dens_overlay(data_ref, draws_posterior_sub)

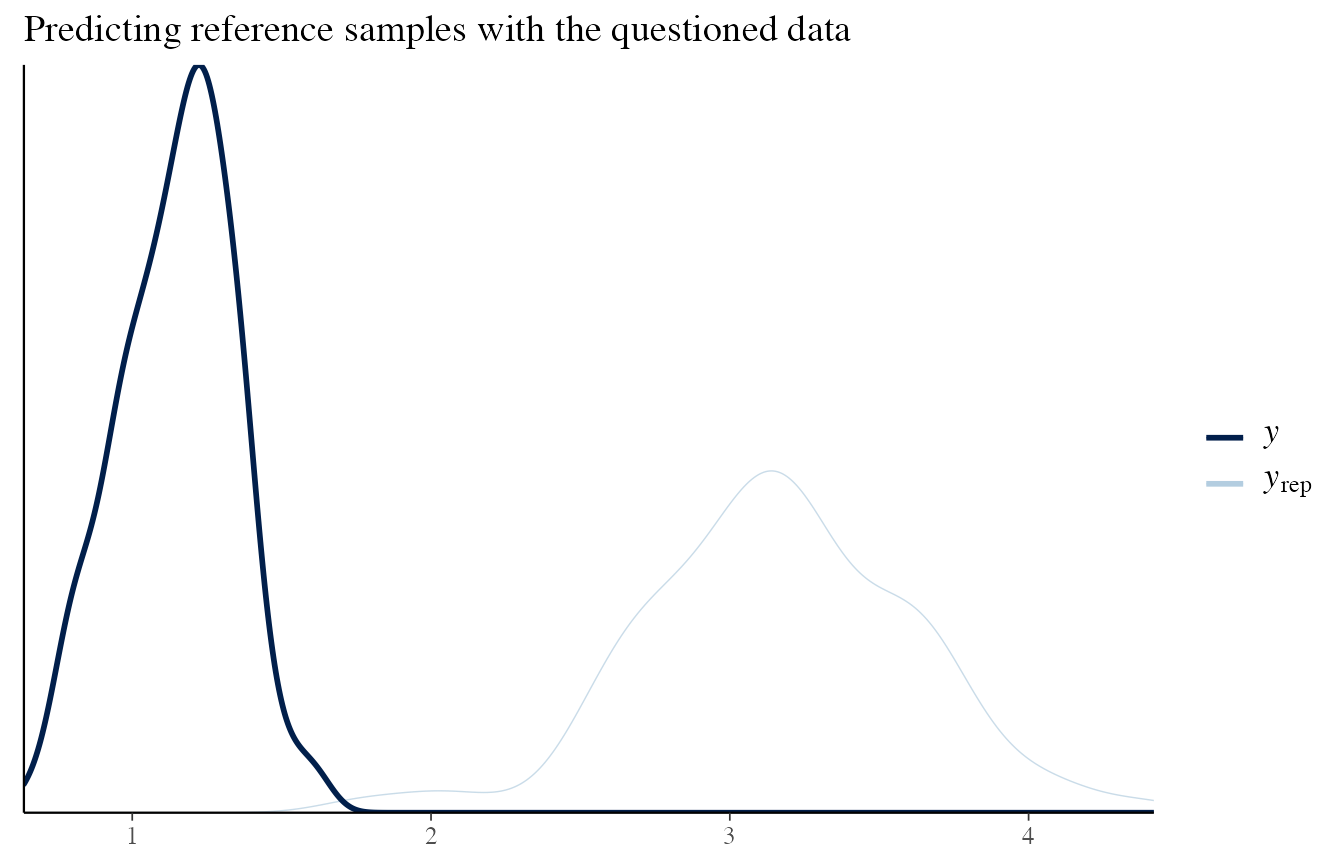

To check that it is working, let’s consider the situation where the sources are different, and consider the reference and questioned items separately.

data_quest <- list_pop$df_pop %>% filter(source == source_diff) %>% pull('x')

# Reference data

draws_posterior <- obj_StanBF_diff$stanfit$H2 %>% as.matrix(pars = c('pred_d_ref'))

draws_posterior_sub <- t(as.matrix(draws_posterior[length(draws_posterior) - (1:length(data_ref)) ]))

bayesplot::ppc_dens_overlay(data_ref, draws_posterior_sub)

# Questioned data

draws_posterior <- obj_StanBF_diff$stanfit$H2 %>% as.matrix(pars = c('pred_d_quest'))

draws_posterior_sub <- t(as.matrix(draws_posterior[length(draws_posterior) - (1:length(data_quest)) ]))

bayesplot::ppc_dens_overlay(data_ref, draws_posterior_sub) + ggtitle('Predicting reference samples with the questioned data')

bayesplot::ppc_dens_overlay(data_quest, draws_posterior_sub) + ggtitle('Predicting questioned samples with the questioned data')